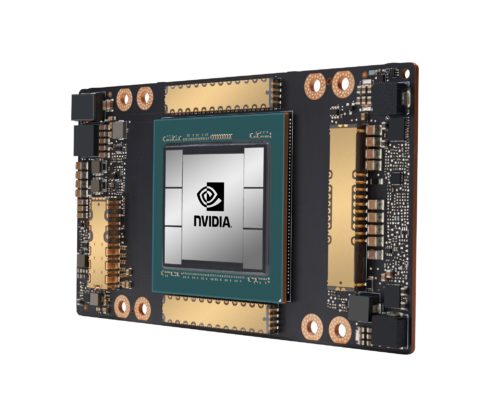

Nvidia’s first GPU based on its Ampere architecture is now available. The GPU, the Nvidia A100, helps unify AI training and inference. It is also optimized for use cases such as data analytics, scientific computing, and cloud graphics.

According to Nvidia, the A100 delivers up to a 20x increase in AI performance over its previous GPUs, which the company describes as its biggest leap in performance across the past eight GPU generations.


The A100’s architecture lets it offer right-sized computing power. Its multi-instance GPU capability allows a single A100 to be partitioned into as many as seven independent instances. In addition, using Nvidia NVLink interconnect technology, multiple A100s can be linked together to operate as one GPU for larger tasks, the company explained.

“The powerful trends of cloud computing and AI are driving a tectonic shift in data center designs so that what was once a sea of CPU-only servers is now GPU-accelerated computing,” said Jensen Huang, founder and CEO of NVIDIA. “NVIDIA A100 GPU is a 20x AI performance leap and an end-to-end machine learning accelerator — from data analytics to training to inference. For the first time, scale-up and scale-out workloads can be accelerated on one platform. NVIDIA A100 will simultaneously boost throughput and drive down the cost of data centers.”

According to Nvidia, several major technology companies plan to incorporate A100s into their hardware offerings, including Alibaba Cloud, Amazon Web Services (AWS), Atos, Baidu Cloud, Cisco, Dell Technologies, Fujitsu, GIGABYTE, Google Cloud, H3C, Hewlett Packard Enterprise (HPE), Inspur, Lenovo, Microsoft Azure, Oracle, Quanta/QCT, Supermicro, and Tencent Cloud.