It is no longer possible to instrument the world.

Over the past 20 years, there has been an explosion of software development. With that growth has come an ever-diversifying, fractal landscape of widely shared software libraries. Using these shared libraries makes up most of modern programming.

This has been a wonderful experience, but there’s a catch. Observing modern applications now means obtaining data from a constantly expanding list of programming languages, application frameworks, message queues, platforms, and runtimes, all interconnected.

To observe our systems properly, these shared libraries must produce observations. Either this happens innately, as a feature provided by the library, or it must be added later as ad hoc instrumentation.

Today, even though they are heavily relied upon, almost no shared libraries include any form of instrumentation, and certainly none that is coherent with the instrumentation other libraries might provide. So ad hoc instrumentation is what we have been stuck with.

When we don’t have another option, we muddle through. Traditionally, this has meant that if you wanted to observe the world of software, you inevitably had to roll up your sleeves and re-instrument that world. That used to work. Today, the cost is untenable. Monitoring systems can no longer find success by focusing on a small handful of application frameworks. The world has moved past that.

In response, we decided we were done muddling through; it was time to get organized:

- Develop a common language for describing the operations of distributed systems.

- Pool our resources as a community, and maintain a shared repository of clients and instrumentation packages.

- Provide a safe mechanism for widely shared software libraries to ship with native instrumentation, alleviating the need for third-party instrumentation.

That realization came in 2016, four years ago. Two projects were started in response. Two years later, they merged to form a single standard.

Reducing standards

Knowing that it would set the entire effort back a year, we chose to merge OpenTracing and OpenCensus. From a distance, it would be easy to see the creation of OpenTelemetry and ask, “Another standard? Where does it end?” This is usually the point where someone shares the xkcd comic in which an attempt to unify 14 competing standards produces 15. So why do it?

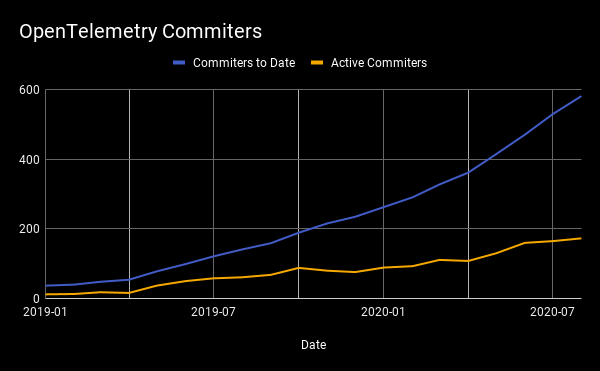

To answer that, you have to join the community, because up close, the view could not be more different. Before, there were two competing projects; now there is a single standard. This was a real change, and it had a substantial impact on both perception and participation. Participation spiked: total contributions quickly surpassed those of either preceding project. We didn’t go from fourteen to fifteen; we went from two to one.

Ultimately, standards are not an act of coding; they are an act of organizing. For the past four years, I have focused my organizing on building the trust needed to make this standard happen. A spec on its own is not enough.

For a real standard to emerge, everyone must either be directly involved or accept the results. Otherwise, by definition, it would not be a standard. When people ask me “Why did these two projects merge?” I like to answer, “Because that was the point.”

“Sandbox projects have jumped in usage by 238%. The top three projects being evaluated by respondents are OpenTelemetry (20%), Service Mesh Interface (14%) and OpenMetrics (14%).”

– CNCF, 2020 Survey

Readying OpenTelemetry

Today marks two years since we solidified into a single effort, and the OpenTelemetry project is preparing for its first stable release. Industry participation is nearly universal: all major infrastructure providers, plus every major vendor, are now core contributors. Large end users, such as Shopify, are both contributing to and running OpenTelemetry in production. After Kubernetes, OpenTelemetry is the largest and most active project in the CNCF.

As the project approaches stability, monitoring and observability systems are considering retiring their current instrumentation and adopting OpenTelemetry as their recommended client architecture.

This is incredibly noteworthy. Instrumentation is a critical part of an observability system: ultimately, a data product is only as good as its data. Switching from a solution you fully control to one where you share decision-making with others is a real act of trust. And yet, several major vendors have already announced they are doing precisely that.

A prediction: every future observability system will use OpenTelemetry instrumentation as its starting point. Why duplicate all of that effort when you could be focused on shipping features?

OpenTelemetry is here to stay.