The eBPF-based observability provider groundcover today announced an observability solution specifically for monitoring LLMs and agents.

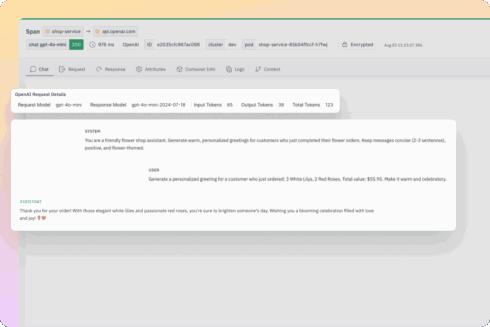

The new offering captures every interaction with LLM providers such as OpenAI and Anthropic, including prompts, completions, latency, token usage, errors, and reasoning paths.

According to groundcover, while LLMs offer many benefits, they also introduce several drawbacks: performance volatility, unpredictable ROI, quality drift, and security and compliance risks.

“What emerges is a paradox: organizations are rushing to productionize LLM workflows because of their immense value, but they are doing so without the observability guardrails that modern engineering teams take for granted in every other part of the stack,” groundcover wrote in a blog post.

Because groundcover uses eBPF, it operates at the infrastructure layer and can achieve full visibility into every request. This allows it to follow the reasoning path behind failed outputs, investigate prompt drift, or pinpoint when a tool call introduces latency.
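To make the tool-call latency case concrete, here is a minimal Python sketch of how per-step timings from a captured request could be inspected. It does not reflect groundcover's actual data model or API: the `Span` record, its fields, the helper functions, and the sample timings are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Span:
    """One step of a hypothetical LLM request trace (illustrative only)."""
    name: str          # e.g. "llm_call:plan" or "tool:search"
    kind: str          # "llm" or "tool"
    latency_ms: float  # wall-clock time spent in this step


def slowest_span(spans: list[Span]) -> Span:
    """Return the step that contributed the most latency."""
    if not spans:
        raise ValueError("trace has no spans")
    return max(spans, key=lambda s: s.latency_ms)


def latency_share(spans: list[Span], kind: str) -> float:
    """Fraction of total latency spent in steps of the given kind."""
    total = sum(s.latency_ms for s in spans)
    if total == 0:
        return 0.0
    return sum(s.latency_ms for s in spans if s.kind == kind) / total


# Made-up timings for a single agent request: plan, call a tool, answer.
trace = [
    Span("llm_call:plan", "llm", 420.0),
    Span("tool:search", "tool", 1830.0),
    Span("llm_call:answer", "llm", 750.0),
]

worst = slowest_span(trace)
print(f"{worst.name} took {worst.latency_ms:.0f} ms")
print(f"tool share of latency: {latency_share(trace, 'tool'):.0%}")
```

In this made-up trace the tool call, not either model call, accounts for most of the request time, which is the kind of distinction per-request visibility is meant to surface.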

“LLM responses rarely fail in isolation. groundcover captures the complete execution context behind every request including prompts, response payloads, tool usage, and session history so you can trace quality issues back to their source. Whether you’re debugging hallucinations, drift, or inconsistent output, you’ll see not just what went wrong, but how and why it happened. This context is key to maintaining output quality in real-world, production environments,” the company said.

All observability data, including sensitive prompts and responses, stays within the customer’s cloud environment. LLM observability is available free to all groundcover customers.