Espresso AI has released a Kubernetes Scheduler for Snowflake to enable dynamic scheduling between warehouses.

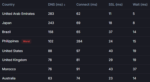

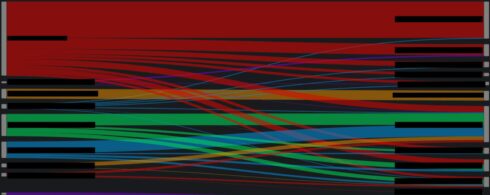

When a query is sent to Snowflake, the scheduler reroutes it to an appropriate cluster based on the computing resources it requires.

By default, compute scheduling in Snowflake is static: each workload is assigned to a specific warehouse and always runs there. According to Espresso AI, the Kubernetes Scheduler separates logical and physical compute so that requests can be routed to any warehouse. Pinning each workload to a fixed warehouse, the company says, can lead to fragmentation, underutilization, and increased costs.

When no existing warehouse can take on the request, Espresso AI can automatically spin up a new warehouse and spin it back down when it is no longer needed.
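
To make the routing idea concrete, here is a minimal sketch of capacity-based routing with on-demand scale-up and scale-down. It is not Espresso AI's implementation: the `Warehouse` and `Pool` classes, the abstract "slots" used to measure compute, the default capacity of 8, and the best-fit placement rule are all assumptions made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Warehouse:
    """A hypothetical running warehouse with a fixed number of compute slots."""
    name: str
    capacity: int
    used: int = 0

    @property
    def free(self) -> int:
        return self.capacity - self.used


@dataclass
class Pool:
    """A hypothetical pool that routes queries to any warehouse with room."""
    warehouses: list[Warehouse] = field(default_factory=list)
    default_capacity: int = 8  # assumed size of a newly started warehouse
    _next_id: int = 1

    def route(self, required: int) -> Warehouse:
        # Look for running warehouses that can absorb the query.
        candidates = [w for w in self.warehouses if w.free >= required]
        if candidates:
            # Best fit: pick the tightest match to keep warehouses well packed.
            target = min(candidates, key=lambda w: w.free)
        else:
            # Nothing fits, so start a new warehouse large enough for the query.
            target = Warehouse(
                name=f"wh-{self._next_id}",
                capacity=max(self.default_capacity, required),
            )
            self._next_id += 1
            self.warehouses.append(target)
        target.used += required
        return target

    def release(self, warehouse: Warehouse, required: int) -> None:
        # Free the slots and shut the warehouse down once it is idle.
        warehouse.used -= required
        if warehouse.used == 0:
            self.warehouses.remove(warehouse)


pool = Pool()
first = pool.route(5)   # no warehouse is running, so one is started
second = pool.route(3)  # fits in the remaining space of the same warehouse
third = pool.route(4)   # no room left, so a second warehouse is started
pool.release(third, 4)  # the second warehouse is idle and is shut down
print([w.name for w in pool.warehouses])
```

In this sketch a new warehouse is started only when no running one has room, which mirrors the goal of using compute that is already being paid for before adding more.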

It also uses reinforcement learning to improve over time, learning how to queue jobs for smarter packing, anticipate spikes in resource needs, upsize queries when space is available so that jobs finish faster, and balance job latency against queue latency.

Kubernetes Scheduler for Snowflake is now generally available, and the company says it could result in a 40% reduction in Snowflake costs.

“We’re threading the needle by dynamically allocating workloads – our Kubernetes scheduler will route queries based on available capacity so that you don’t unnecessarily spin up new clusters,” said Ben Lerner, CEO and co-founder of Espresso AI. “You can think of it as Uber Pool for your queries – if there’s room in a warehouse that’s already running, we make sure you run your workloads there and utilize the compute you’re already paying for.”