A recent publication by the National Institute of Standards and Technology (NIST) and its partners highlights a significant vulnerability in AI/ML systems: their susceptibility to adversarial attacks.

In a blog post, NIST states that the core challenge is that training data for AI systems may not always be reliable. The data often comes from online sources or interactions with the public, both of which are susceptible to manipulation. This vulnerability creates opportunities for malicious actors to “poison” the AI training process. Such interference can occur both during the initial training period and later, as the AI system continues to adapt and refine its behavior based on ongoing interactions with the real world.

The impact of corrupted training data is particularly evident in AI applications like chatbots. When these systems are exposed to manipulated inputs or maliciously crafted prompts, they may learn and replicate undesirable behaviors, such as responding with abusive or racist language. This problem underscores the challenge of ensuring that AI systems are trained with trustworthy data and highlights the need for robust mechanisms to safeguard AI training and operational processes against such adversarial influences.

“This is the best AI security publication I’ve seen. What’s most noteworthy are the depth and coverage. It’s the most in-depth content about adversarial attacks on AI systems that I’ve encountered. It covers the different forms of prompt injection, elaborating and giving terminology for components that previously weren’t well-labeled. It even references prolific real-world examples like the DAN (Do Anything Now) jailbreak, and some amazing indirect prompt injection work. It includes multiple sections covering potential mitigations, but is clear about it not being a solved problem yet. It also covers the open vs closed model debate,” said Joseph Thacker, principal AI engineer and security researcher at AppOmni, a SaaS security company. “There’s a helpful glossary at the end, which I personally plan to use as extra ‘context’ to large language models when writing or researching AI security. It will make sure the LLM and I are working with the same definitions specific to this subject domain. Overall, I believe this is the most successful over-arching piece of content covering AI security.”

In the publication, titled “Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations,” the authors categorize various types of adversarial attacks and offer strategies to mitigate them. The publication is part of NIST’s larger initiative to foster the development of trustworthy AI. It aims to guide AI developers and users in understanding potential attacks and preparing for them, while acknowledging that no perfect solution or “silver bullet” exists to eliminate such vulnerabilities.

The report outlines four major types of attacks:

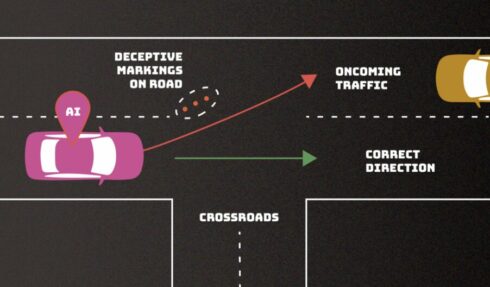

- Evasion Attacks: These attacks occur after an AI system is deployed and alter its inputs to mislead it. For example, an attacker might change road signs to confuse an autonomous vehicle.

- Poisoning Attacks: These attacks happen during the AI’s training phase and introduce corrupted data to skew what the AI learns. An example is adding inappropriate language to a chatbot’s training data.

- Privacy Attacks: Occurring during AI deployment, these attacks aim to extract sensitive information about the AI or its training data, often through reverse engineering.

- Abuse Attacks: These involve inserting incorrect information into sources the AI uses post-deployment, leading the AI to absorb and act on false data.
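
To make the first category more concrete, here is a minimal sketch of an evasion attack against a toy linear classifier. It is not taken from the NIST report; the weights, bias, input values, and the `evade` helper are all hypothetical and chosen only for illustration. The idea is that a small, bounded change to each input feature can be enough to flip the model's decision after deployment.

```python
# Toy linear classifier: predicts 1 when w.x + b > 0, otherwise 0.
# WEIGHTS, BIAS, and the input below are made-up values for illustration.
WEIGHTS = [0.8, -0.5, 0.3]
BIAS = -0.1


def score(x):
    """Weighted sum of the features plus the bias."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def predict(x):
    """Return 1 for a positive score, 0 otherwise."""
    return 1 if score(x) > 0 else 0


def sign(v):
    """Return 1, -1, or 0 depending on the sign of v."""
    return (v > 0) - (v < 0)


def evade(x, epsilon):
    """Move each feature by at most epsilon in the direction that lowers the score."""
    return [xi - epsilon * sign(w) for w, xi in zip(WEIGHTS, x)]


original = [0.5, 0.2, 0.4]
adversarial = evade(original, epsilon=0.25)

print("original prediction:   ", predict(original))
print("adversarial prediction:", predict(adversarial))
```

In this sketch the attacker is assumed to know the model's weights. Real evasion attacks often work with far less knowledge, and real models are far more complex, so treat this only as an illustration of the mechanism.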

“Most of these attacks are fairly easy to mount and require minimum knowledge of the AI system and limited adversarial capabilities,” said co-author Alina Oprea, a professor at Northeastern University. “Poisoning attacks, for example, can be mounted by controlling a few dozen training samples, which would be a very small percentage of the entire training set.”
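
The point about a small number of poisoned samples can be illustrated with a toy example. The sketch below is an assumption-laden illustration, not a method from the report: it uses a hypothetical one-dimensional nearest-centroid classifier, made-up training values, and deliberately extreme poison points. Five mislabeled samples, roughly 2.5% of the training set, are enough to change the prediction for one chosen target input.

```python
def train_centroids(samples):
    """Return {label: mean feature value} for a list of (value, label) pairs."""
    totals, counts = {}, {}
    for value, label in samples:
        totals[label] = totals.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: totals[label] / counts[label] for label in totals}


def predict(centroids, value):
    """Assign the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


# Made-up clean data: class 0 clusters around 0, class 1 clusters around 10.
clean = [(v, 0) for v in (-1.0, 0.0, 1.0)] * 33
clean += [(v, 1) for v in (9.0, 10.0, 11.0)] * 33

# Hypothetical poison: five extreme points labeled as class 1,
# which drag the class 1 centroid toward the attacker's target input.
poison = [(-100.0, 1)] * 5
poisoned = clean + poison

clean_model = train_centroids(clean)
poisoned_model = train_centroids(poisoned)

target = 4.0
poison_fraction = len(poison) / len(poisoned)

print("clean model predicts:   ", predict(clean_model, target))
print("poisoned model predicts:", predict(poisoned_model, target))
print("poisoned share of training set:", round(poison_fraction, 3))
```

Extreme outliers like these would be easy to filter in practice. The sketch only shows why the share of poisoned data can be small relative to its effect; attacks on real systems are generally subtler.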