Secure Code Warrior is trying to provide organizations with greater visibility and control over developers’ use of AI coding tools with the launch of its new solution, Trust Agent: AI.

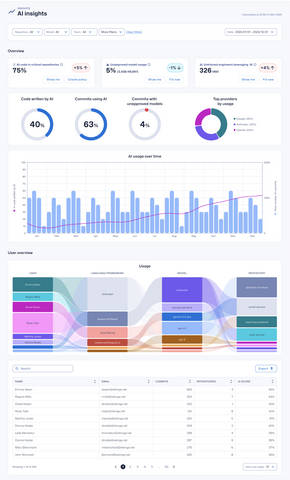

The new offering tracks key signals that show how AI usage affects software development risk. These signals include AI coding tool usage, vulnerability data, code commit data, and developers' secure coding skills.

“AI allows developers to generate code at a speed we’ve never seen before,” said Pieter Danhieux, co-founder and CEO of Secure Code Warrior. “However, using the wrong LLM by a security-unaware developer, the 10x increase in code velocity will introduce 10x the amount of vulnerabilities and technical debt.”

According to Secure Code Warrior, what makes its solution unique is that it evaluates the relationship between the developer, the models they use, and the repository where AI-generated code is committed.

It offers governance at scale through capabilities such as identifying unapproved LLMs, measuring how much code is AI-generated, and flexible policy controls. These controls can log, warn about, or block pull requests from developers who use unapproved tools or who lack sufficient knowledge of secure coding best practices.

“Trust Agent: AI produces the data needed to plug knowledge gaps, filter security-proficient developers to the most sensitive projects, and, importantly, monitor and approve the AI tools they use throughout the day,” said Danhieux. “We’re dedicated to helping organizations prevent uncontrolled use of AI on software and product security.”

Trust Agent: AI is currently available in beta, and general availability is planned for sometime in 2026.